Tricks of the Trade

(with Professor Glesser)

Story Time

Like most everybody who takes calculus, I learned the quotient rule for differentiation:

$$\left(\frac{f}{g}\right)' = \frac{g\,f' - f\,g'}{g^2}$$

Or, in song form (sung to the tune of Old MacDonald):

Low d-high less high d-low

E-I-E-I-O

And on the bottom, the square of low

E-I-E-I-O

[Note that when sung incorrectly as High d-low less low d-high, the rhyme will not work!]

At some point, I was given an exercise to show that

$$\left(\frac{f}{g}\right)' = \frac{f}{g}\left(\frac{f'}{f} - \frac{g'}{g}\right)$$

If you start from this reformulation, it is a simple matter of algebra to get to the usual formulation of the quotient rule. However, a couple of things caught my eye. First, the reformulation seemed much easier to remember: copy the function and then write down the derivative of each function over the function and subtract them; the order is the “natural” one where the numerator comes first.
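For the record, the algebra in question is short: distribute the $\frac{f}{g}$ and put everything over the common denominator $g^2$.

$$\frac{f}{g}\left(\frac{f'}{f} - \frac{g'}{g}\right) = \frac{f'}{g} - \frac{f\,g'}{g^2} = \frac{g\,f' - f\,g'}{g^2}$$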

Story Within A Story Time

Actually, there is a reasonably nice way to remember the order of the quotient rule, at least if you understand the meaning of the derivative. Assume that both the numerator $f$ and the denominator $g$ are positive functions. If $f'$ is larger, then the numerator, and with it the quotient, is growing faster, so the derivative of the quotient should be larger too; that is, the term $g\,f'$ should have a positive sign in front of it. Similarly, if $g'$ is larger, then the denominator is growing faster, which makes the quotient shrink faster, so the derivative of the quotient should be smaller; that is, the term $f\,g'$ should have a negative sign in front of it.

Secondly, the appearance of the original function in the answer screams: LOGARITHMIC DIFFERENTIATION. Let’s see why.

If $h = \frac{f}{g}$ with $f$ and $g$ positive, then

$$\ln h = \ln f - \ln g.$$

Differentiating both sides using the chain rule yields

$$\frac{h'}{h} = \frac{f'}{f} - \frac{g'}{g},$$

and so the result follows by multiplying both sides by $h$. This is one of my favorite exercises to give first-year calculus students, both before and after teaching them logarithmic differentiation*.
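A quick numerical sanity check, offered as a sketch: the functions $f(x) = x^2 + 1$ and $g(x) = e^x + 2$ are hypothetical choices (any positive, differentiable pair would do), and `central_difference` is just an independent finite-difference estimate used for comparison. The usual quotient rule and the logarithmic form should agree to rounding error.

```python
import math


# Hypothetical example functions (not from the post); both are positive everywhere.
def f(x):
    return x**2 + 1


def df(x):
    return 2 * x


def g(x):
    return math.exp(x) + 2


def dg(x):
    return math.exp(x)


def quotient_rule(x):
    """Usual form: (g f' - f g') / g^2, i.e. low d-high less high d-low over low squared."""
    return (g(x) * df(x) - f(x) * dg(x)) / g(x) ** 2


def log_form(x):
    """Reformulation: (f/g) * (f'/f - g'/g)."""
    return (f(x) / g(x)) * (df(x) / f(x) - dg(x) / g(x))


def h(x):
    return f(x) / g(x)


def central_difference(func, x, step=1e-6):
    """Numerical derivative of func at x, used as an independent check."""
    return (func(x + step) - func(x - step)) / (2 * step)


for x in (-1.0, 0.0, 0.5, 2.0):
    print(x, quotient_rule(x), log_form(x), central_difference(h, x))
```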

*Don’t you think that giving out the same problem at different times during the course is an underutilized tactic?

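Being a good math nerd, I had to take this further. What if the numerator and denominator are, themselves, a product of functions? Assume that $f = f_1 f_2 \cdots f_m$ and that $g = g_1 g_2 \cdots g_n$. Setting $h = \frac{f}{g}$, taking the natural logarithm of both sides, and applying log rules, we get:

$$\ln h = \sum_{i=1}^{m} \ln f_i - \sum_{j=1}^{n} \ln g_j$$

Differentiating (using the chain rule, as usual) gives:

$$\frac{h'}{h} = \sum_{i=1}^{m} \frac{f_i'}{f_i} - \sum_{j=1}^{n} \frac{g_j'}{g_j}$$

Multiplying both sides by $h$ now gives us the formula:

$$h' = \frac{f_1 f_2 \cdots f_m}{g_1 g_2 \cdots g_n}\left(\sum_{i=1}^{m} \frac{f_i'}{f_i} - \sum_{j=1}^{n} \frac{g_j'}{g_j}\right)$$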
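Here is a minimal sketch of that formula in code, assuming a hypothetical representation where each factor is passed as a `(function, derivative)` pair. It needs only one product for $h$ and one sum of ratios $f_i'/f_i$, however many factors there are. The example $h(x) = \frac{x \sin x}{(x^2 + 1)e^x}$ is also hypothetical, chosen only to have two factors on top and two on the bottom.

```python
import math
from typing import Callable, Sequence, Tuple

# Hypothetical representation for this sketch: each factor is a (function, derivative) pair.
Factor = Tuple[Callable[[float], float], Callable[[float], float]]


def log_diff_quotient(num: Sequence[Factor], den: Sequence[Factor], x: float) -> float:
    """Derivative of h = (f_1 ... f_m) / (g_1 ... g_n) at x, computed as
    h(x) * (sum_i f_i'(x)/f_i(x) - sum_j g_j'(x)/g_j(x)).
    Assumes every factor is nonzero at x, since each ratio divides by it."""
    h = math.prod(f(x) for f, _ in num) / math.prod(g(x) for g, _ in den)
    log_derivative = (sum(df(x) / f(x) for f, df in num)
                      - sum(dg(x) / g(x) for g, dg in den))
    return h * log_derivative


def central_difference(func: Callable[[float], float], x: float, step: float = 1e-6) -> float:
    """Independent numerical estimate of func'(x)."""
    return (func(x + step) - func(x - step)) / (2 * step)


# Hypothetical example: h(x) = x sin(x) / ((x^2 + 1) e^x).
num = [(lambda x: x, lambda x: 1.0), (math.sin, math.cos)]
den = [(lambda x: x**2 + 1, lambda x: 2 * x), (math.exp, math.exp)]


def h(x: float) -> float:
    return x * math.sin(x) / ((x**2 + 1) * math.exp(x))


print(log_diff_quotient(num, den, 1.3), central_difference(h, 1.3))
```

Because `math.prod` of an empty sequence is 1, passing `den=[]` handles a plain product with no denominator.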

An immediate example of using this is as follows. Differentiate . The usual way would involve the quotient rule mixed with two applications of the product rule. The alternative is to simply rewrite the function, and to work term by term giving:

which immediately reveals some rather easy simplifications.

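But we haven’t used all of the log rules yet! We haven’t used the exponent law, $\ln(x^a) = a \ln x$. So, let’s assume that each of our $f_i$ and $g_j$ has an exponent, call them $a_i$ and $b_j$, respectively, so that $h = \frac{f_1^{a_1} \cdots f_m^{a_m}}{g_1^{b_1} \cdots g_n^{b_n}}$. In this case, using logarithmic differentiation, we get:

$$\ln h = \sum_{i=1}^{m} a_i \ln f_i - \sum_{j=1}^{n} b_j \ln g_j.$$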

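Differentiating, we get almost the same formula as above, but with some extra coefficients:

$$h' = \frac{f_1^{a_1} \cdots f_m^{a_m}}{g_1^{b_1} \cdots g_n^{b_n}}\left(\sum_{i=1}^{m} a_i \frac{f_i'}{f_i} - \sum_{j=1}^{n} b_j \frac{g_j'}{g_j}\right)$$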
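The exponent version only adds a weight to each ratio. A minimal sketch, assuming a hypothetical layout where each factor is a `(function, derivative, exponent)` triple; the example $h(x) = \frac{\sqrt{x}\,(x+1)^3}{\sqrt[3]{x^2+1}}$ is made up to show roots written as fractional exponents, not the example from the post.

```python
import math


def log_diff_powers(num, den, x):
    """Derivative of h = prod f_i^{a_i} / prod g_j^{b_j} at x, computed as
    h(x) * (sum_i a_i f_i'/f_i - sum_j b_j g_j'/g_j).
    num and den are lists of (f, df, exponent) triples (hypothetical layout).
    Assumes every f(x) > 0 so that fractional powers are real."""
    h = (math.prod(f(x) ** a for f, _, a in num)
         / math.prod(g(x) ** b for g, _, b in den))
    weighted = (sum(a * df(x) / f(x) for f, df, a in num)
                - sum(b * dg(x) / g(x) for g, dg, b in den))
    return h * weighted


# Hypothetical example with roots written as exponents:
#   h(x) = x^(1/2) * (x + 1)^3 / (x^2 + 1)^(1/3)
num = [(lambda x: x, lambda x: 1.0, 0.5), (lambda x: x + 1, lambda x: 1.0, 3)]
den = [(lambda x: x**2 + 1, lambda x: 2 * x, 1 / 3)]


def h(x):
    return math.sqrt(x) * (x + 1) ** 3 / (x**2 + 1) ** (1 / 3)


# Compare against a central finite difference at x0.
step = 1e-6
x0 = 2.0
numeric = (h(x0 + step) - h(x0 - step)) / (2 * step)
print(log_diff_powers(num, den, x0), numeric)
```

Setting every exponent to 1 recovers the product-only formula above.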

Look back to the example near the top of the post. If we rewrite it with exponents instead of roots, we get:

.

Taking the derivative is now completely straightforward.

Again, there is some simplifying to be done.

An easier problem is one without a denominator! Let . Normally, one would use the product rule here, but why don’t we try our formula? It gives:

That was pretty painless, while the product rule becomes more tedious as the number of factors in the product increases.
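To see the bookkeeping difference concretely, here is a sketch with a hypothetical product $p(x) = x(x+1)(x+2)\cdots(x+k)$, where every factor has derivative 1. The fully expanded product rule builds $k+1$ products of $k$ factors each, while the formula with no denominator, $p' = p\sum_i f_i'/f_i$, needs one product and one sum.

```python
import math


def product_rule_expanded(x, k):
    """Derivative of p(x) = x (x + 1) ... (x + k) by the product rule:
    sum over i of f_i' times the product of the other k factors.
    Here every f_i(x) = x + i, so f_i' = 1."""
    return sum(math.prod(x + j for j in range(k + 1) if j != i) for i in range(k + 1))


def log_product_derivative(x, k):
    """Same derivative via p' = p * sum_i f_i'/f_i = p * sum_i 1/(x + i).
    Assumes x + i != 0 for every i."""
    p = math.prod(x + i for i in range(k + 1))
    return p * sum(1 / (x + i) for i in range(k + 1))


print(product_rule_expanded(1, 2), log_product_derivative(1, 2))
```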

Oh, and if you can’t imagine this being appropriate to teach to students, no less an authority than Richard Feynman encouraged his students to differentiate this way. At the very least, his support gives me the confidence to let you in on my little secret.

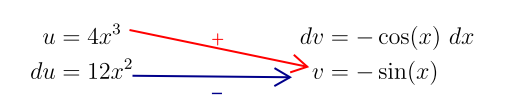

Notice here that we are condensing quite a bit of notation with this method since we are no longer using the u, v, du, and dv notation. But, we are getting out precisely the same information. We draw diagonal left-to-right arrows to indicate which terms multiply and we superscript the arrows with alternating pluses and minuses to give the appropriate sign.
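Assuming the table in the image is the usual tabular layout for integration by parts (differentiate one column down to zero, integrate the other repeatedly, multiply along the diagonals with alternating signs), here is a sketch for the common case $\int p(x)\,e^{ax}\,dx$. The polynomial is passed as a hypothetical coefficient list `[c0, c1, c2, ...]`, and the helper names are made up for this illustration.

```python
import math


def poly_derivative(coeffs):
    """Derivative of c0 + c1 x + c2 x^2 + ... given as [c0, c1, c2, ...]."""
    return [i * c for i, c in enumerate(coeffs)][1:]


def poly_eval(coeffs, x):
    return sum(c * x**i for i, c in enumerate(coeffs))


def tabular_integral(coeffs, a):
    """Antiderivative of p(x) e^{ax} (a != 0) by the tabular method.
    Left column:  p, p', p'', ... (differentiate until it vanishes).
    Right column: e^{ax}/a, e^{ax}/a^2, ... (one more integration per row).
    Each diagonal product p^(k) * e^{ax}/a^(k+1) gets the sign (-1)^k.
    Returns a function F with F' = p(x) e^{ax}."""
    terms = []  # (sign, k-th derivative coefficients, power of a in the denominator)
    d = list(coeffs)
    k = 0
    while any(c != 0 for c in d):
        terms.append(((-1) ** k, d, k + 1))
        d = poly_derivative(d)
        k += 1

    def F(x):
        return math.exp(a * x) * sum(s * poly_eval(dk, x) / a**n for s, dk, n in terms)

    return F


# Integral of x e^x is x e^x - e^x.
F = tabular_integral([0, 1], 1.0)
print(F(0.0), F(1.0))
```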
