This is the second in my series on techniques I use in the classroom. Many of the things I talk about in this series are well-known, in the sense that at least 50 people know about them. What is certain is that they are not well-known to students. This entry concerns something that I want my college students to have down pat, but on which I expect them to do rather poorly: adding and subtracting fractions.

Tricks of the Trade

(with Professor Glesser)

For simplicity, let me break up addition of fractions into three categories:

- Common Denominators

- One denominator is a multiple of the other

- Other (the algebraist in me wants to write, “$\mathbb{Z}$-linearly independent denominators”.)

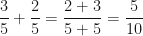

Now, once you get past students wanting to do things like

$\frac{a}{b} + \frac{c}{d} = \frac{a+c}{b+d}$ (batting average addition)

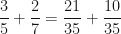

then students catch on pretty quickly that the common denominator case is where they want to be. The second category of problems is only slightly more tricky. Any student who has ever needed to make change can figure out why the fraction with the smaller denominator should be rewritten over the larger one. In any case, I am going to assume that the students in question have mastered categories 1 and 2 (both in recognizing and computing).

Let’s up the ante a bit and try a problem in which the denominators are 5 and 7.

I was taught to find common denominators, i.e., find a common multiple of 5 and 7 and multiply each fraction by an appropriate factor to get two fractions with common denominators. Here, for instance, we note that the least common multiple of 5 and 7 is 35. Multiplying the first fraction by $\frac{7}{7}$ and the second by $\frac{5}{5}$, we get a new (easier) problem in which both denominators are 35, and we all know how to finish from there. No problem, right? Well, wrong. Students routinely mess this up. First, students are usually taught to find the least common denominator and this, for many of them, is guesswork. Second, they tend to multiply fractions incorrectly in a way they never do for category 2 problems. Third, it is just too many steps for most of them to remember and/or complete without arithmetic errors. Heck, I even messed up the above problem when I typed it up the first time.
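To see how many separate steps the find-a-common-multiple recipe really asks for, here is a minimal Python sketch of it. The function name `add_via_lcd` and the numerators 2 and 3 are my own illustrative assumptions; only the denominators 5 and 7 come from the discussion above.

```python
from fractions import Fraction
from math import gcd

def add_via_lcd(a, b, c, d):
    """Add a/b + c/d the schoolbook way, via the least common denominator."""
    common = b * d // gcd(b, d)     # step 1: least common multiple of b and d
    left = a * (common // b)        # step 2: scale the first numerator
    right = c * (common // d)       # step 3: scale the second numerator
    return left + right, common     # step 4: add the numerators

# Hypothetical problem 2/5 + 3/7 (numerators are assumed for illustration).
num, den = add_via_lcd(2, 5, 3, 7)
print(f"{num}/{den}")               # prints 29/35

# Sanity check against Python's exact rational arithmetic.
assert Fraction(num, den) == Fraction(2, 5) + Fraction(3, 7)
```

Each commented step is a place where a student can slip, which is the complaint being made here.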

How the Pros Do It

Let’s take a more general situation: $\frac{a}{b} + \frac{c}{d}$. We can always find a common denominator by multiplying the denominators¹. So, we get $\frac{a}{b} + \frac{c}{d} = \frac{ad}{bd} + \frac{bc}{bd} = \frac{ad + bc}{bd}$. Simply put, starting from the original problem, you cross multiply and add to get the numerator and multiply across to get the denominator.
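The cross-multiply rule is short enough to sketch in a few lines of Python. The names `cross_add` and `lowest_terms` are hypothetical helpers of mine, and the sample fractions are assumed for illustration rather than taken from the original examples.

```python
from fractions import Fraction
from math import gcd

def cross_add(a, b, c, d):
    """a/b + c/d by cross multiplying: returns (ad + bc, bd), not reduced."""
    return a * d + b * c, b * d

def lowest_terms(num, den):
    """Cancel the common factor that multiplying the denominators may leave."""
    g = gcd(num, den)
    return num // g, den // g

print(cross_add(2, 5, 3, 7))                  # (29, 35)
print(cross_add(1, 6, 1, 4))                  # (10, 24): not the least common denominator
print(lowest_terms(*cross_add(1, 6, 1, 4)))   # (5, 12) after cancelling

# The unreduced answer is still the right rational number.
assert Fraction(*cross_add(1, 6, 1, 4)) == Fraction(1, 6) + Fraction(1, 4)
```

The second example shows the only cost of skipping the least common denominator: one cancellation at the end.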

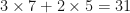

With actual numbers in place of the letters, this becomes easy: the numerator is just the sum of the two cross products and the denominator is the product of the two denominators.

Pictorially, it looks like two diagonal arrows for the numerator and one arrow straight across the bottom for the denominator. Teach your students this and it will amaze you how you no longer have to interrupt the flow of a demonstration to add fractions. Show them how useful it is in the kitchen when they need to add two measurements without the benefit of pencil and paper.

What about subtraction?

No problem! A similar derivation shows that to subtract, you simply cross multiply and subtract to get the numerator and multiply to get the denominator: $\frac{a}{b} - \frac{c}{d} = \frac{ad - bc}{bd}$.

The biggest problem students face when doing subtraction is remembering in which order to subtract (it didn’t matter for addition!). If you always start with the down-right arrow, there is no issue, though. It’s amazing: I’ve taught this to students who could hardly subtract fractions on paper but who, after learning this trick, would do the problems in their heads as fast as I can.
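The order issue is easy to see in a small Python sketch. The function name `cross_sub` and the sample fractions are assumptions of mine, chosen only to illustrate the point; the down-right product is taken to be the first numerator times the second denominator.

```python
from fractions import Fraction

def cross_sub(a, b, c, d):
    """a/b - c/d: take the down-right product a*d first, then subtract b*c."""
    return a * d - b * c, b * d

print(cross_sub(3, 4, 2, 5))   # (7, 20)
print(cross_sub(2, 5, 3, 4))   # (-7, 20): swapping the fractions flips the sign

# Both results agree with exact rational subtraction.
assert Fraction(*cross_sub(3, 4, 2, 5)) == Fraction(3, 4) - Fraction(2, 5)
assert Fraction(*cross_sub(2, 5, 3, 4)) == Fraction(2, 5) - Fraction(3, 4)
```

Starting with the other diagonal would compute `b * c - a * d`, which is the negative of the correct numerator.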

What about algebra?

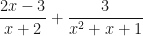

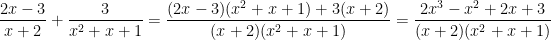

It is really convenient with many algebra problems. For instance, a sum of two fractions whose denominators are algebraic expressions gives a lot of students fits, as they can’t see how to find a common denominator. But with this method, they cross multiply exactly as before.

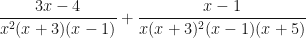

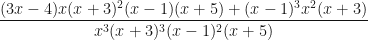

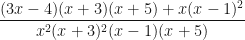

To be sure, the arithmetic is still hairy, but there is less writing than before and the student doesn’t have to think about this part; they can just do it. Now, there are some cases where this method creates some cancelling work, namely when the two denominators share a common factor. A student should learn to recognize that it is much easier to multiply each fraction only by the factor its own denominator is missing and then add. But, if they are just getting started, as long as they aren’t too overzealous about distributing, the result of this method still shows the shared factor in both the numerator and the denominator, and the cancellation is not too hard to find.

Is it really a trick?

No, not really. The formula $\frac{a}{b} + \frac{c}{d} = \frac{ad + bc}{bd}$ actually defines fraction addition. In fact, if you’re interested in a little abstract algebra, hold on to your hats.

For the advanced reader who isn’t afraid of a little jargon

There is a lovely theorem in algebra saying that every integral domain can be embedded in a field. Huh? You ate what now?

All right, let’s break it down.

AG: An integral domain is just a commutative ring where…

Poor Reader: Whoa whoa whoa. Hold on just a second, there, professor. A what?

AG: Ah, yes, a commutative ring. It is a ring that is commutative.

PR: (eyes rolling) Yes, brilliant. What the heck is a ring? (looks nervously at his or her wedding band)

AG: It is an abstract structure composed of a non-empty set and two binary operations…um, long story short, it is a set where you can add and multiply and the distributive laws hold. The only real caveat is that sometimes multiplication isn’t commutative. That means that sometimes $ab \neq ba$. Matrix multiplication is like this. In fact, the set of $n \times n$ matrices forms a ring since you can add and multiply them as usual.

PR: Riiiigggghhttt (eyes are glazed over).

AG: A commutative ring is where you don’t have the multiplication problem. Then everything works just the way you want it to.

PR: Okay, let’s say for a moment I understand what you’re talking about. What is this integral domain thing then?

AG: Good question. Remember how you solve a quadratic equation? You factor it into a product of two linear factors set equal to 0 and then conclude that one factor or the other must be 0. How do you conclude that?

PR: Well, we know that if $ab = 0$ then $a = 0$ or $b = 0$.

AG: Precisely. Any commutative ring that has that property, we call an integral domain.

PR: That is a stupid name. I think I could do better.

AG: Yes, yes, you’re very smart; now, shut up! The theorem is that every integral domain can be embedded in a field. A field is just an integral domain where you can divide by anything other than 0. (Even in abstract algebra, except in one case, we are never, ever allowed to divide by 0.)

PR: What do you mean by embedded?

AG: It is a bit technical. Strictly speaking, it means that there is an injective ring homomorphism from the integral domain into a field. But just think about how every integer is also a rational number.

PR: Rational number?

AG: I’m sorry: a fraction. See, if I have the integer 4, I can think of it as $\frac{4}{1}$ and then it is a fraction. This is how we embed the integers into the rational numbers, which are a field since you can divide by fractions.

PR: You can divide by integers too. Aren’t they a field?

AG: No, because if you divide by an integer, you don’t normally get an integer. Fields have to be closed. By that, I mean that when you divide, the answer is still in the field.

PR: Hmmm. I don’t think I understand this.

AG: That’s okay. If you could really learn it in a blog post, you wouldn’t have to take an entire year of it in graduate school. The point of all this is to get at the following. To build a field from an integral domain, you take the elements of the integral domain and start writing down all fractions from those elements. You need to then explain how to add and multiply those fractions.² The answer is to define these as

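$$\frac{a}{b} + \frac{c}{d} = \frac{ad + bc}{bd}$$

and

$$\frac{a}{b} \cdot \frac{c}{d} = \frac{ac}{bd},$$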

which is precisely how you do it normally. Now, there is a lot more to proving the theorem. There are issues of showing this is well-defined, that the usual laws of arithmetic (e.g., associativity, commutativity, distributivity) hold and that you can produce an injective ring homomorphism. This shows though, that it is not just more computationally efficient to add this way, but that when we don’t show it, we are actually missing out on a core ingredient of a key theorem in abstract algebra.

PR: (snoring)…hmm, what, oh yes, that is a shame. Did you check your pocket for the key?
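For readers who would rather run the construction than read about it, here is a rough Python sketch of fractions built from the integers. The class name `Frac` and the helper `embed` are hypothetical names of mine, and this covers only the definitions (equality, addition, multiplication, and the embedding), not the proof that everything is well-defined.

```python
class Frac:
    """A formal fraction num/den over the integers, den != 0, never reduced."""

    def __init__(self, num, den):
        if den == 0:
            raise ZeroDivisionError("denominator must be nonzero")
        self.num, self.den = num, den

    def __eq__(self, other):
        # a/b equals c/d exactly when ad == bc
        return self.num * other.den == self.den * other.num

    def __add__(self, other):
        # a/b + c/d is defined as (ad + bc)/(bd)
        return Frac(self.num * other.den + self.den * other.num,
                    self.den * other.den)

    def __mul__(self, other):
        # (a/b)(c/d) is defined as (ac)/(bd)
        return Frac(self.num * other.num, self.den * other.den)

    def __repr__(self):
        return f"Frac({self.num}, {self.den})"

def embed(n):
    """Send the integer n to the fraction n/1."""
    return Frac(n, 1)

# Spot-check of well-definedness: different names for the same fractions
# (1/2 = 2/4 and 1/3 = 3/9) give equal sums.
assert Frac(1, 2) + Frac(1, 3) == Frac(2, 4) + Frac(3, 9)

# The embedding respects addition and multiplication of integers.
assert embed(2) + embed(3) == embed(5)
assert embed(2) * embed(3) == embed(6)
```

Nothing is ever reduced to lowest terms here; the cross-multiplication test for equality does all the work, which is why that definition has to come along with the other two.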

________________________________________________________________________________

¹ This will likely not give you the least common denominator, but the only cost is that you’ll have to do some cancellation at the end.

² You also need to define when two fractions are the same: $\frac{a}{b} = \frac{c}{d}$ if and only if $ad = bc$.

should have a positive sign in front of it. Similarly, if the derivative of the denominator is increasing, then the denominator is getting bigger faster, which means the quotient is getting smaller faster, and so the derivative of the quotient is decreasing, i.e.,

should have a negative sign in front of it.

, then

. Differentiating both sides using the chain rule yields

. This is one of my favorite exercises to give first year calculus students—before and after teaching them logarithmic differentiation*.

and that

. Setting

, taking the natural logarithm of both sides, and applying log rules, we get:

now gives us the formula:

. The usual way would involve the quotient rule mixed with two applications of the product rule. The alternative is to simply rewrite the function, and to work term by term giving:

and

has an exponent, call them

and

, respectively. In this case, using logarithmic differentiation, we get:

.

.

. Normally, one would use the product rule here, but why don’t we try our formula. It gives:

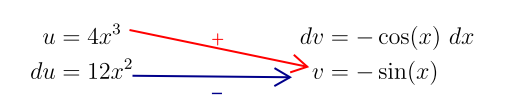

Notice here that we are condensing quite a bit of notation with this method since we are no longer using the u, v, du, and dv notation. But, we are getting out precisely the same information. We draw diagonal left-to-right arrows to indicate which terms multiply and we superscript the arrows with alternating pluses and minuses to give the appropriate sign.

Notice here that we are condensing quite a bit of notation with this method since we are no longer using the u, v, du, and dv notation. But, we are getting out precisely the same information. We draw diagonal left-to-right arrows to indicate which terms multiply and we superscript the arrows with alternating pluses and minuses to give the appropriate sign.